Introduction

With every new release of their GPU SDKs (Software Development Kits), NVIDIA and AMD are bringing GPGPU (general-purpose computing on GPUs) applications into the mainstream. As the SDKs become easier to use and more developer-friendly, developers are finding new and innovative ways to leverage these massively parallel processing powerhouses that normally sit idle collecting desktop dust. Currently, the majority of adopters of this technology are in the scientific and medical communities. The two primary vendors competing for the lion's share of the GPU market are NVIDIA and AMD. NVIDIA implements parallel processing through CUDA (Compute Unified Device Architecture), while AMD relies on OpenCL (Open Computing Language), an open standard that NVIDIA hardware also supports. Although a GPU can execute the instructions passed to it through these APIs, only certain kinds of workloads, namely those that can be split into many independent parallel operations, will benefit from the “stream processors” on the GPU.


Application Support

With each passing month, GPGPU capabilities are advancing in open source projects and finding their way into the larger software development houses.

Popular GPGPU applications:

- Video Processing
- Scientific/Research
  - Folding@Home (distributed computing to understand protein folding)
  - Seti@Home
  - MATLAB
  - Weather Forecasting
  - Molecular Modeling
- Medical
  - CT Reconstruction
  - Medical Imaging
- Cryptography
  - Deepbit.net / BitcoinCZ / Mt.Red (Bitcoin mining)
  - oclHashCat-plus (password recovery/cracking)
- Benchmark Apps
  - ShaderToyMark (heavy pixel shader OpenGL benchmark)
  - FurMark (intense OpenGL benchmark and burn-in/stress test)
  - FluidMark (NVIDIA – PhysX fluid simulation)
- Utility
  - GPU Caps Viewer (OpenGL/OpenCL configuration, capabilities, and other details)
  - GPU Shark (GPU monitoring utility for NVIDIA/AMD)
  - GPU Toolkit (bundle of GPU utilities from Geeks3D)
- Monitoring
  - AMD System Monitor (slick monitoring app for AMD chipsets)
  - TMonitor (accurate graph with a high refresh rate for individual cores)
  - HWMonitor (hardware monitoring app providing specifics on all aspects of system hardware)

Considerations for the GPU System Build

There are a few things to consider when planning a GPGPU build. First, what is the purpose of your build? Do you intend to run only one application, or would you prefer to keep the build more general purpose? If you are building for a single purpose, check the requirements of your application thoroughly. If your application supports only CUDA, NVIDIA hardware will be your only option. Otherwise, consider the architecture of the application: was it optimized for an NVIDIA (CUDA) card or an AMD card?

NVIDIA Versus AMD?

If your GPGPU application lets you choose between CUDA and OpenCL, weigh factors such as pricing, card size, heat output, and processor speed versus processor count. Generally speaking, read the recommended configuration or best practices for your application and follow them. If there is no clear choice between one card and the other, keep in mind that NVIDIA cards typically run hotter because of their higher clock rates, while AMD cards have a greater number of processors running at lower frequencies. For example, if your application benefits from a greater degree of parallelism, the AMD boards may be more advantageous.

How Many GPUs?

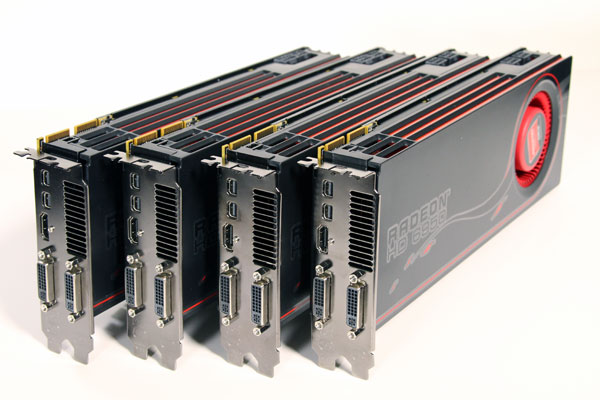

If your application (and operating system) supports more than one GPU, you have a few options. Consider the available space inside your case, available motherboard slots, spacing between GPUs, airflow through the case and through the GPUs, the bus bandwidth required per GPU, power requirements, and noise level.

Space Requirements

It goes without saying that these cards can get quite large and heavy, so a good plan for the physical card configuration will save you a headache or two. For a single card, most standard-size desktop cases will suit your purpose and may have room for a second card. If unsure, look up the dimensions of your video card and take some measurements inside your case just to be safe. Common sizing problems arise when the video card extends past the motherboard and butts up against the case structure or hard drives. Miscellaneous cabling or a bottom-mounted power supply could interfere too. If you are using more than one card in a desktop case, I highly recommend a case configured for maximum ventilation and airflow.

While this will suffice for a desktop gaming rig, I would recommend a completely different configuration for a dedicated GPGPU / HPC build: an open case design. This approach solves many problems. It addresses the heat buildup created by the GPUs' thermal envelope, reduces overall build cost, makes maintenance easier, and allows for a custom external cooling solution.

Motherboard Layout

If you are seeking higher processing density, consider a motherboard with four PCIe x16 connectors. A few motherboards support this configuration, but care must be taken to provide proper cooling. Motherboards supporting a 4-way GPU configuration include:

Popular Motherboards for Bitcoin (BTC) or Crypto-Currency Builds

Note the per-GPU bandwidth requirements of your GPGPU application. If they are high, give additional consideration to slot performance (not every physical x16 slot is wired with 16 lanes) so you don’t bottleneck your HPC build.
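To compare a slot against your application's needs, a rough per-direction bandwidth estimate can be computed from the PCIe generation and the number of electrical lanes. The sketch below is illustrative only. It uses the standard per-lane transfer rates and line-encoding overheads for PCIe 1.x–3.0. The function names, and the assumption that your application states its requirement in MB/s, are hypothetical. Real throughput will be somewhat lower because of protocol overhead.

```python
# Approximate usable PCIe bandwidth per slot, in one direction.
# Per-lane figures after line-encoding overhead:
#   PCIe 1.x: 2.5 GT/s, 8b/10b    -> 250 MB/s per lane
#   PCIe 2.0: 5.0 GT/s, 8b/10b    -> 500 MB/s per lane
#   PCIe 3.0: 8.0 GT/s, 128b/130b -> ~985 MB/s per lane

# (transfer rate in GT/s, encoding efficiency) for each generation
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
}


def slot_bandwidth_mb_s(generation: int, lanes: int) -> float:
    """Usable one-direction bandwidth of a slot in MB/s (1 MB = 10**6 bytes)."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # GT/s * efficiency = payload Gbit/s per lane; / 8 -> GB/s; * 1000 -> MB/s
    per_lane = rate_gt_s * efficiency / 8 * 1000
    return per_lane * lanes


def slot_is_sufficient(generation: int, lanes: int, required_mb_s: float) -> bool:
    """True if the slot meets the application's (hypothetical) per-GPU requirement."""
    return slot_bandwidth_mb_s(generation, lanes) >= required_mb_s


print(slot_bandwidth_mb_s(2, 16))  # full x16 PCIe 2.0 slot
print(slot_bandwidth_mb_s(2, 1))   # x1 slot or riser
```

A bandwidth-light workload such as mining can run happily on an x1 link. A workload that streams large datasets to the card may need the full x16, so check how each physical slot is actually wired before populating all four.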

Power Requirements

Review the maximum power requirements of the GPUs you plan to purchase (per-GPU draw multiplied by the number of GPUs) plus the additional hardware components, and make sure the total stays under 80% of the maximum rating of the power supply you plan to purchase. You may be pushing these GPUs hard for long periods of time, so avoid overly stressing your power supply by running it too close to its rated output.

You may be concerned about the power bill if you are running a dense configuration, and you should be. By today’s standards the average system consumes about 150W (idle) without the GPU. Adding a Radeon 6970 boosts power consumption by roughly another 200W per GPU, for a grand total of ~350W with the GPU at 97% utilization and the fan at 80%. Dig up a recent electric bill and find the price you pay per kWh; in my case it was $0.11 per kWh. The formula is:

<cost per day> = ( <watts used> / 1000 ) * <price per kWh> * <hours per day>

So at $0.11 per kWh, running the system with one GPU for 24 hours works out to ( 350W / 1000 ) * $0.11 * 24 = $0.924. In other words, I would pay nearly $1 a day to run one GPU flat out around the clock. Every situation will be different, but this at least gives you an idea of what to expect.
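The sizing rule and cost formula above can be captured in a few lines, which makes it easy to try different GPU counts and power supplies before buying. This is a minimal sketch. The helper names are hypothetical. The example figures (150W base system, ~200W per GPU, $0.11 per kWh) are the ones quoted in the text, so substitute your own measurements and utility rate.

```python
# Hypothetical helpers for sizing the power supply and estimating running cost.


def total_load_watts(base_system_w: float, gpu_w: float, gpu_count: int) -> float:
    """Estimated full-load draw: base system plus per-GPU draw times GPU count."""
    return base_system_w + gpu_w * gpu_count


def within_psu_budget(load_w: float, psu_rated_w: float,
                      max_fraction: float = 0.8) -> bool:
    """True if the load stays at or below max_fraction (default 80%) of the PSU rating."""
    return load_w <= psu_rated_w * max_fraction


def daily_cost(load_w: float, price_per_kwh: float,
               hours_per_day: float = 24) -> float:
    """(watts / 1000) * price per kWh * hours per day."""
    return load_w / 1000 * price_per_kwh * hours_per_day


# One GPU, matching the worked example in the text.
one_gpu = total_load_watts(150, 200, 1)        # 350 W
print(round(daily_cost(one_gpu, 0.11), 3))     # 0.924

# Four GPUs on one board: check PSU headroom before buying.
four_gpus = total_load_watts(150, 200, 4)      # 950 W
print(within_psu_budget(four_gpus, 1000))      # False: 950 W > 800 W
print(within_psu_budget(four_gpus, 1200))      # True: 950 W <= 960 W
```

Note that the 150W base figure is an idle number, so a fully loaded system will draw somewhat more than this estimate. Treat the result as a lower bound when picking a power supply.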

Power Monitoring Options:


Cooling and Airflow

If you have a server room at your disposal, problem solved. Otherwise, consider the placement carefully. Under full load your build will be quite noisy and output a tremendous amount of heat; one of these builds will make a moderately sized bedroom uncomfortable in no time at all. If you close the door to the room, you will be dealing with heat buildup. If you don’t, your A/C could run frequently, depending on the thermostat location.

A very viable option that solves many of these issues is to relocate the GPGPU system to a patio or garage. This will generally solve your noise and cooling issues at the same time. For those who might develop a nervous twitch at the thought of placing a server/PC in an outdoor environment, I invite you to read a study from Intel about a cooling and failure-rate test they conducted. Quite fascinating. It convinced me, and I was reassured after several months of solid performance, cooling, and silence.

The end result was that I placed my two GPGPU systems on a shelf in my garage. To allow for air circulation I left the garage door cracked open about 4 inches. This created ventilation at the top of the garage door for the GPU heat to escape and let cooler fresh air near the ground enter the garage and circulate. To improve circulation further I placed two box fans inside the garage door, opposite the HPC setup, which had a significant impact on cooling. In the end the two systems actually ran 14 degrees Celsius cooler than when they were located inside the house directly under an A/C vent. Noise eliminated: check. A/C run time reduced: check. Cooler setup: check.

Concerning card placement, pay close attention to the spacing between the GPU cards. If you are placing the boards back-to-back, use spacers to create air gaps, prevent unnecessary vibration, and prevent sparking or shorts. Significant cooling gains can be achieved by giving the top three cards room to breathe; if the cards are fully enclosed and use blower-style fans, spacing is going to help. I found that synthetic cork works best for this task. Do not use regular cork, because it crumbles. Cut the synthetic cork into three equal spacers between your four GPU boards and you will be in great shape. This spacing technique does not work if your cards are mounted in a case or are not placed next to each other.

Noise Issues

These systems are going to be loud. There is not a whole lot one can do except put a sound barrier between you and the system. For example, placing the system in a server room or, at home, in a garage eliminates the noise burden.

Headless Configuration?

A headless configuration (a server without a monitor) is an efficient way of doing business, conditions permitting. Whether you can deploy a system headless depends largely on the operating system and network access. The system will most likely be networked if it is used for HPC purposes, so the OS is your next major concern. Linux/UNIX make it quite easy to configure a system for headless operation, but attempting this with Windows may not be worth the effort. A side note concerning Windows: if you are using multiple GPU boards, a monitor must be plugged into every board for it to function as a usable GPU. The only other option is a resistor hack or VGA dummy plug on the DVI port. It comes down to Windows disabling a GPU when it does not detect a monitor, so you have to fake one.

Operating system?

What platform does the application support? Would you like to run a headless configuration? A black-box configuration with full automation was ideal in my circumstance: I wanted to avoid downtime, automate application startup, monitor GPU vitals, and run headless. The obvious choice for me was Linux (specifically Ubuntu, due to its robust GPU support). Remember that you really are bound by the application’s requirements, so if you have a choice, consider how you would like to manage the system.
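The author doesn’t describe how startup was automated. One common pattern for the “avoid downtime” goal is a small supervisor that relaunches the compute application whenever it exits. The sketch below is hypothetical and not the author’s setup. On a modern Linux system the init system can do the same job (for example systemd’s `Restart=` option), which is usually the better choice for production.

```python
import subprocess
import time
from typing import List, Optional


def supervise(command: List[str], max_restarts: Optional[int] = None,
              delay_s: float = 5.0) -> int:
    """Run command; whenever it exits, wait delay_s seconds and start it again.

    Stops after max_restarts restarts (None means restart forever) and
    returns the total number of times the command was launched.
    """
    launches = 0
    restarts = 0
    while True:
        launches += 1
        result = subprocess.run(command)  # blocks until the application exits
        print(f"{command[0]} exited with code {result.returncode}")
        if max_restarts is not None and restarts >= max_restarts:
            return launches
        restarts += 1
        time.sleep(delay_s)  # brief pause so a crash loop doesn't spin the CPU
```

Usage would look like `supervise(["./my_gpu_app"])`, where `my_gpu_app` is a placeholder for your GPGPU application. Launching the supervisor itself at boot is still left to the OS.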

Monitoring Vitals

Right off the bat I knew SNMP (Simple Network Management Protocol) or e-mail alerts would give me as much or as little information as I desired. I was primarily interested in graphing GPU temperatures throughout the day and monitoring bandwidth. Cacti, a popular SNMP graphing front end, was already running on one of my systems, so I simply added my two GPGPU compute units to its graph set.
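To feed GPU temperatures into a graphing or alerting setup, you need a small script that reads the current values. The sketch below rests on several assumptions. It targets an NVIDIA card and uses `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader,nounits` options. The author’s Radeon cards would need AMD’s own tooling, so the query command would differ. The 85°C alert threshold is an arbitrary placeholder. How the output reaches Cacti (for example through net-snmp’s `extend` directive in `snmpd.conf`) is left open.

```python
import subprocess
from typing import Dict

# Assumes an NVIDIA card with the driver's nvidia-smi tool on the PATH.
QUERY = ["nvidia-smi", "--query-gpu=index,temperature.gpu",
         "--format=csv,noheader,nounits"]


def parse_temperatures(csv_text: str) -> Dict[int, int]:
    """Parse lines like '0, 65' into {gpu_index: temperature_celsius}."""
    temps = {}
    for line in csv_text.strip().splitlines():
        index, temp = (field.strip() for field in line.split(","))
        temps[int(index)] = int(temp)
    return temps


def overheating(temps: Dict[int, int], limit_c: int = 85) -> Dict[int, int]:
    """Return only the GPUs at or above limit_c (a hypothetical alert threshold)."""
    return {gpu: t for gpu, t in temps.items() if t >= limit_c}


def read_temperatures() -> Dict[int, int]:
    """Run nvidia-smi once and parse its output (requires the NVIDIA driver)."""
    output = subprocess.run(QUERY, capture_output=True, text=True,
                            check=True).stdout
    return parse_temperatures(output)
```

A cron job or SNMP poll could call `read_temperatures()` and print one value per GPU for graphing. It could also pass the result to `overheating()` and send an e-mail when the returned dictionary is non-empty.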

Conclusion

Generally speaking, a project of this nature is not to be taken lightly. The power consumption of the GPUs, and the noise that comes with it, are enough to dissuade most individuals from tackling this project with a casual goal in mind. If you don’t have access to a server room or a remote area of your house (like a garage), I would suggest a much lighter build. Your significant other might take issue with a small jet engine running in the adjoining bedroom.

The positive side of things would be an incredibly powerful system limited only by compatible software and one’s penchant for pushing the boundaries. Don’t forget about the awesome bragging rights to your fellow geek buddies, because this thing is a powerhouse!

Additional Resources